LANGUAGE-GAMES AS A FOCAL NOTION IN LANGUAGE THEORY

Abstract

Wittgenstein introduced the language game idea to emphasize that language use is a rule-governed activity. But how do rules govern our linguistic behavior? After a long search, Wittgenstein decided in May 1941 that the meaning relations are constituted by entire language-games that are primary in relation to their rules. A language game is not defined by its rules. To understand the rules one has to master the game.

An application of the mathematical theory of games to language-games led to game-theoretical semantics, which is the true conceptual basis of logic and mathematics. It has prompted important new developments in logic and in the foundations of mathematics, including a more relaxed concept of probability. In the light of game-theoretical semantics, the logic that Frege formulated and that was canonized as traditional first-order logic is flawed in the sense of being too poor conceptually. This flaw is being corrected by the development of independence-friendly logic and its extensions.

Game-theoretical semantics can also help to integrate syntax and semantics. Rules for a move in a semantical game (language game) typically involve both an action on the part of a “player” and also a syntactical transformation rule to indicate what the next move will be like. Those transformations are all the syntax that is needed in an overall language theory. They are but an aspect of the semantical game rules.

These days, if I mention language-games, the first association of my audience is likely to be Wittgenstein. But what did Ludwig think about language-games? One reason why it is fascinating to study Wittgenstein’s thought is that his notebooks, that is, his philosophical diaries, enable us to follow the dynamics of his development, almost re-living his line of thought. So let us fly our time-machine to late May 1941. What we find is Wittgenstein in an agonizing crisis. Usually he writes only a couple of sentences daily in his diary. Now, in three days (May 23–26), he writes something like fifteen pages. He writes a sentence, strikes it out, tries to write a better one, corrects it or offers an alternative formulation, and so on.

But what is he so excited about? Bertrand Russell once asked Wittgenstein whether he was thinking about logic or about his sins. Wittgenstein replied: “Both”. In those May days (maydays?) he would not have given either answer. But he could have said “meaning” or “meaning and language-games”. Wittgenstein was struggling with one of the main problems of all language theory. What are the meaning relations that enable us not only to understand a name or a sentence but to use language in our transactions with reality? How are the expressions of our language connected with the world?

In his Tractatus, Wittgenstein had defended an answer that in its simplest form amounts to saying: by picturing. Propositions are pictures of possible states of affairs, and names represent objects by sharing the same logical form. By 1929, however, he had given up this idea of language as a mirror of a (possible) world and replaced it by a vision of language as a rule-governed human activity (or, rather, a complex of such activities). This puts the main theoretical onus on the notion of rule. But what is a rule? In October 1929 Wittgenstein rejected phenomenological languages as our actual basic language in favor of a physicalistic language. Hence rules must operate through their actual symbolic expressions: written instructions, formulas, samples and suchlike. But how can such external objects, in Wittgenstein’s vivid words “dead” objects, guide my decisions? His problem was not how to follow a rule, but how a rule can guide my actions. This is not a problem about us humans only. How does a rule, for instance a blueprint, guide a machine? Wittgenstein did not ask “Does a computer think?” but “Does a computer compute?”

Wittgenstein pondered such questions from 1933 on. In May 1941 he realized that he was not making any real progress. We do not need rules as an explanatory notion, he came to think. We can understand and describe exhaustively a person’s actions and other behavior without referring to rules as separate entities. On the contrary, we can understand the rules one is following only by reference to the complex of interrelated activities that they are a part of. “Language-games” is but a label for those activities. Thus Wittgenstein’s conceptual priorities switched. He came to believe that in the last analysis one cannot define a game by its rules. One can understand a rule only via the game that it is an aspect of. Rules became conceptually secondary in relation to their language-games.

This, then, is Wittgenstein’s (perhaps we should say, the third Wittgenstein’s) answer to the question of what the links are that connect language with reality: the links are language-games. This alone is enough to make them a focal notion in the study of language.

This may be the truth and nothing but the truth, but it is not the whole truth about language or about language-games. Wittgenstein’s own account of language-games is unsatisfactory, or at least incomplete and impressionistic. One source of dissatisfaction with it is that Wittgenstein never gives fully worked-out large-scale examples of intrinsically interesting language-games. In particular, he does not explain what the language-games are that lend our logical and mathematical words their meaning, in spite of having himself used logic and mathematics as testing-grounds of rule-following. But, Wittgenstein aside, what are the language-games like that give logic and mathematics their meaning? Remarkably, Wittgenstein need not have constructed such games himself. He may have been the first one to use the term “language-game”, but highly interesting language-games had been examined by an earlier thinker. As Risto Hilpinen was the first to point out, the real inventor of language-games was none other than one of the fathers of semiotics, Charles Sanders Peirce.

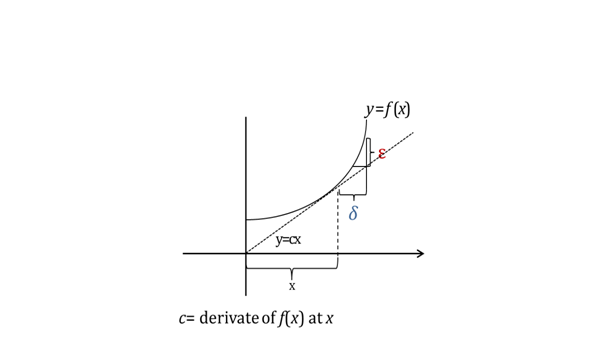

The games he was particularly interested in were precisely the ones that are involved (“played”) in logic and in its uses in mathematics. One can get an idea of what they are from the so-called epsilon-delta technique of calculus texts. For instance, the definition of the continuity of a function $f(x)$ at $x_0$ might be read: Given any $\varepsilon > 0$, however small, one can find $\delta > 0$ such that $|f(x) - f(x_0)| < \varepsilon$ as soon as $|x - x_0| < \delta$. Here the locution “one can find” does precisely the same job as the existential quantifier $(\exists \delta)$, and the force of “given” is that of a universal one, $(\forall \varepsilon)$. In general, the logical home of one of the basic logical notions, the quantifiers “every” and “there exists” (“some”), lies in the language-games of seeking and finding. Intuitively, in the associated picture we can find a suitable $\delta$ no matter how small an $\varepsilon$ is given. As an illustration, you can contemplate the accompanying figure that displays the epsilon-delta definition of the derivative. What is going on is that you can make $|f(x) - f(x_0)|$ as small as you wish by pushing $x$ close enough to $x_0$.
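Written with explicit quantifiers (the standard textbook form, added here for illustration), the reading of continuity of $f$ at $x_0$ is as follows; the “given” moves belong to the falsifier and the “one can find” move to the verifier:

```latex
\forall \varepsilon > 0 \;\, \exists \delta > 0 \;\, \forall x \;
  \bigl( |x - x_0| < \delta \rightarrow |f(x) - f(x_0)| < \varepsilon \bigr)
```

A play consists of the falsifier choosing $\varepsilon$, the verifier answering with $\delta$, and the falsifier choosing $x$; the verifier wins the play if the final conditional holds. Continuity at $x_0$ amounts to the verifier having a winning strategy, that is, a rule for choosing $\delta$ given $\varepsilon$.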

Several questions arise here. Are we really talking here of genuine games? Can a linguist or a semiotician actually recognize such a game when it is being played? As an answer, imagine watching a bunch of children moving around. Surely from their behavior you can find out that they are playing hide-and-seek.

Perhaps you still feel like asking, as the late Erik Stenius once asked me: How can the ideas of seeking and finding help to constitute our notion of existence, codified in the existential quantifier? I replied: Erik, in your native Swedish language, how do you express existence? You say, “det finns”, literally “one can find”. And Swedish is not the only language to do essentially the same.

I will not try to examine in detail how Peirce used this language-game idea. It was for Peirce originally a semiotic rather than logical idea. Even though there are several important connections between the game idea and Peirce’s different treatments of logic, he never quite integrated it with the rest of his logical theory, certainly not into a formal system of the same rigid structure as Frege’s contemporary logic.

Why not? One of the first ideas that occurred to me when I internalized Wittgenstein’s notion of language-game was: this opens vast theoretical opportunities. Wittgenstein’s contrary view notwithstanding, “games” are not so called by mere family resemblance. There exists an explicit, fully developed mathematical theory of games, launched by Émile Borel, John von Neumann and Oskar Morgenstern. We can now use it in language theory, perhaps its concepts more than its results. Language-games can be thought of literally as games in the sense of von Neumann’s mathematical theory of games. The outcome is the fundamental semantical theory of logic known as game-theoretical semantics. Even though it is not yet generally used, it is superior to the usual Tarski-type semantics. The truth of a sentence S can be defined in it by reference to the associated game G(S) of attempted verification. But truth does not mean the verifier’s winning a play of G(S). It means the existence of a (sure-win) strategy for the verifier.
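For finite models, the definition of truth as the existence of a winning strategy can be sketched in a few lines. The sketch assumes a hypothetical tuple encoding of first-order formulas and uses backward induction, which is available because these finite games have perfect information; under that assumption the result coincides with the familiar Tarskian truth value. The names `substitute` and `verifier_can_win` are illustrative, not taken from any library:

```python
"""Sketch: truth as the existence of a winning strategy for the verifier.

Formulas use a hypothetical nested-tuple encoding:
  ("atom", "L", ("x", "y")), ("not", f), ("and", f, g), ("or", f, g),
  ("forall", "x", f), ("exists", "x", f)
Individuals are plain strings; variable names must differ from them.
"""


def substitute(f, var, name):
    """Syntactic side of a quantifier move: replace free `var` by `name`."""
    op = f[0]
    if op == "atom":
        return ("atom", f[1], tuple(name if t == var else t for t in f[2]))
    if op == "not":
        return ("not", substitute(f[1], var, name))
    if op in ("and", "or"):
        return (op, substitute(f[1], var, name), substitute(f[2], var, name))
    if f[1] == var:  # quantifier rebinds `var`: no free occurrences below
        return f
    return (op, f[1], substitute(f[2], var, name))


def verifier_can_win(f, domain, relations):
    """True iff the player now in the verifier's role has a winning strategy
    in the game for sentence `f` on a finite model (backward induction)."""
    op = f[0]
    if op == "atom":  # play ends: the verifier wins iff the atom is true
        return f[2] in relations[f[1]]
    if op == "not":  # roles swap; these finite games are determined
        return not verifier_can_win(f[1], domain, relations)
    if op == "or":  # verifier chooses a disjunct
        return any(verifier_can_win(g, domain, relations) for g in f[1:])
    if op == "and":  # falsifier chooses a conjunct
        return all(verifier_can_win(g, domain, relations) for g in f[1:])
    # Quantifier move: pick an individual, then play on the new sentence
    nexts = [substitute(f[2], f[1], d) for d in domain]
    if op == "exists":  # verifier picks
        return any(verifier_can_win(g, domain, relations) for g in nexts)
    return all(verifier_can_win(g, domain, relations) for g in nexts)  # forall


if __name__ == "__main__":
    domain = ["a", "b"]
    relations = {"L": {("a", "b"), ("b", "a")}}
    every_some = ("forall", "x", ("exists", "y", ("atom", "L", ("x", "y"))))
    some_every = ("exists", "y", ("forall", "x", ("atom", "L", ("x", "y"))))
    print(verifier_can_win(every_some, domain, relations))  # True
    print(verifier_can_win(some_every, domain, relations))  # False
```

The two example sentences differ only in quantifier order: in the first the verifier chooses y knowing x, in the second y must be chosen before x, and only the first gives the verifier a winning strategy in this model.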

So why did Peirce not develop an explicit game-theoretical semantics? An answer, or at least an important part of an answer, lies in the very nature of game theory. “Game-theory” can even be claimed to be misnamed. It applies to activities we do not call games (“games against nature”). The intended “games” are not all competitive, either. The key notion of this theory is not “game” but “strategy”. It could more accurately be called strategy theory rather than game theory. And strategies can be strategies of cooperation rather than strategies in a conflict situation.

Game theory was born, I am tempted to say, at the moment von Neumann (or was it Borel?) introduced the general notion of strategy. Unfortunately, that happened after Peirce, who therefore could not avail himself of the notion of strategy. This deprived him of the main concept needed to regiment the use of the game idea. This is seen particularly clearly in the games that serve as the semantical basis of logic. They are stereotypical real or fictional attempts to find a fragment of the world in which a sentence S is true. As was pointed out, the idea of a language-game enables us to define explicitly the crucial semantical concept of truth. This cannot be done for one’s own language if we are using the common Tarski-type semantics, as Tarski’s famous impossibility result suggests. This impossibility result, though applicable to the kind of language Tarski used, does not concern suitably richer languages. Tarski’s famous theorem accordingly has little philosophical or semiotic interest.

There are plenty of other opportunities opened up by various applications of game-theoretical semantics. One of the first questions that a game theorist would ask about semantical games is: are they games of perfect information or not? In other words, does a player making a move always know what earlier moves have been made? At first sight, informationally imperfect games might seem to be impossible or at least unrealistic. They are nevertheless instantiated by card games like bridge, in which a player does not know the other players’ hands. Such games can be handled conceptually by interpreting a “player” as a team, like the partners at bridge, whose members do not share their information: they do not know what cards their partner has.
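The team idea can be made concrete for quantifier games. The sketch below is an illustration under simplifying assumptions, not a full implementation of IF logic: the function name `verifier_has_strategy` and the prefix encoding are hypothetical, existential choices are assumed to depend only on earlier universal moves, and strategies are found by brute force over lookup tables (exponential, so tiny finite domains only). A verifier’s choice marked as independent of $\forall x$, written $(\exists y/\forall x)$ in IF notation, must be made by a team member who has not seen $x$:

```python
from itertools import product


def verifier_has_strategy(prefix, matrix, domain):
    """Brute-force search for a winning verifier strategy with imperfect information.

    prefix: e.g. [("forall", "x"), ("exists", "y", ["x"])]. The list in an
        "exists" entry names earlier universal variables the verifier may NOT
        see, as the slash does in IF notation (Ey/Ax).
    matrix: predicate on a dict assigning a value to every variable.
    Simplification: existential choices depend only on visible universal moves.
    """
    universals, deps = [], []
    for q in prefix:
        if q[0] == "forall":
            universals.append(q[1])
        else:  # ("exists", var, hidden): record the universals it may see
            deps.append((q[1], [u for u in universals if u not in q[2]]))

    # Candidate strategies: one lookup table per existential variable,
    # mapping the visible universal values to a chosen individual
    tables = []
    for _, visible in deps:
        keys = list(product(domain, repeat=len(visible)))
        tables.append([dict(zip(keys, vals))
                       for vals in product(domain, repeat=len(keys))])

    for profile in product(*tables):
        def verifier_wins(values):
            env = dict(zip(universals, values))
            for (var, visible), table in zip(deps, profile):
                env[var] = table[tuple(env[u] for u in visible)]
            return matrix(env)

        # The profile is a winning strategy if it beats every falsifier play
        if all(verifier_wins(v) for v in product(domain, repeat=len(universals))):
            return True
    return False


def same(env):
    return env["x"] == env["y"]


if __name__ == "__main__":
    # (Ax)(Ey) x = y: y may be chosen knowing x
    print(verifier_has_strategy([("forall", "x"), ("exists", "y", [])], same, [0, 1]))  # True
    # (Ax)(Ey/Ax) x = y: y must be chosen without knowing x
    print(verifier_has_strategy([("forall", "x"), ("exists", "y", ["x"])], same, [0, 1]))  # False
```

On the domain {0, 1}, the verifier wins (∀x)(∃y) x = y by copying x, but has no winning strategy once y must be chosen without knowing x, since a single fixed y cannot equal both values.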

What about the games that are the semantical home of logic and mathematics? It turns out that Frege’s logic involves a tacit assumption of perfect information. This assumption has turned out to be a vicious mistake. Language-games with imperfect information might seem to be exotic animals, but in reality you can find them all over the place. For instance, in the mathematical example about continuity, $\delta$ is obviously chosen knowing $\varepsilon$. But is it chosen knowing the point $x_0$ or not? In the usual notion of continuity on a domain, where continuity is required at each point $x_0$, a dependence of $\delta$ on $x_0$ and not only on $\varepsilon$ is assumed. But if we do not assume it, we obtain a different notion called uniform continuity. Such uniformity concepts play an important role in mathematics.
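In the slash notation of independence-friendly logic, where $(\exists \delta / \forall x_0)$ marks a choice of $\delta$ made without knowledge of $x_0$, the two notions might be written as follows (a sketch in standard notation, with $\varepsilon$ and $\delta$ ranging over positive reals and $x_0$, $x$ over the domain of $f$):

```latex
% Continuity at every point: delta may depend on x_0 and epsilon
\forall x_0 \, \forall \varepsilon \, \exists \delta \, \forall x \,
  \bigl( |x - x_0| < \delta \rightarrow |f(x) - f(x_0)| < \varepsilon \bigr)

% Uniform continuity: delta is chosen without knowing x_0
\forall x_0 \, \forall \varepsilon \, (\exists \delta / \forall x_0) \, \forall x \,
  \bigl( |x - x_0| < \delta \rightarrow |f(x) - f(x_0)| < \varepsilon \bigr)
```

In this particular case the independence can also be expressed in ordinary first-order form by moving $\forall x_0$ to the right of $\exists \delta$, giving $\forall \varepsilon \, \exists \delta \, \forall x_0 \, \forall x \, (\ldots)$. Not every pattern of independence can be linearized in this way, which is where IF logic goes beyond the received first-order logic.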

Frege’s tacit assumption of perfect information imposed a heavy restriction on what his logic can do. Later logicians followed his example, with the result that for more than a century logicians used an unnecessarily weak logic. In this respect, Peirce was far ahead of Frege. Not only was he aware of the nature of uniformity concepts; he even occasionally corrected professional mathematicians when they made a mistake in this respect. This makes a big difference to how we look upon the entire history of modern logic. Usually Frege is considered the architect of the basic part of our contemporary logic, referred to as quantification theory or first-order logic, while Peirce is relegated to the role of a slightly later co-discoverer. In reality, what Peirce had in mind was a far richer logic than the one that Frege, and following him practically all other logicians, have been using for more than a hundred years. Only in the nineteen-nineties was the flaw in Frege’s and his followers’ logic pointed out and partly corrected in what is misleadingly labelled independence-friendly (IF) logic.

The notion of language-game thus puts several main issues in a new light. One more such potential change of approach remains to be pointed out. It concerns the relation of syntax and semantics. Nowadays the two are usually considered separate studies. Here semioticians are doing better than linguists or analytical philosophers of language. For one thing, there is the famous Chomskian thesis of the autonomy of syntax. Generative grammarians maintain that one can construct a complete syntax for a (natural) language without appealing to semantical considerations.

This may in fact be a reasonable approximation, even though there are no general theoretical reasons for such a complete autonomy. I once offered a small concrete example of syntactical regularities in English that have only a semantical explanation: the distribution of the English quantifier word “any”. Even though this is only a minuscule and undoubtedly exceptional detail in overall English syntax, the issue was felt to be so significant that Noam Chomsky himself paid me the enormous tribute of criticizing my note in public. (He does not bother to criticize unclear or unimportant ideas.)

Even if Chomsky were right, the relation of semantics to syntax would be a major problem. The history of Chomsky’s own views illustrates the issues. At an early stage of his thinking, the possibility of generating a sentence syntactically in two different ways was supposed to explain its ambiguity, as in examples like

(1) Visiting relatives can be boring.

Later, the hypothesis of deep structure was proposed. It was proposed, not so much by Chomsky himself as by some of his associates, that the syntactical generation of a sentence happens in two stages. First, phrase structure rules generate the so-called deep structure. This deep structure is then subjected to various transformations in the technical syntactical sense. The crucial assumption is that the semantic interpretation of the sentence operates on the deep structure. Transformations were assumed not to affect meaning.

This deep structure theory soon proved inadequate. In hindsight, linguists ought to have realized that the deep structure could not always match the logical form of sentences and that transformations like the passive transformation could change meaning. For instance, the sentences

(2) Someone can beat anyone.

(3) Anyone can be beaten by someone.

do not mean the same.

Later, generative grammarians gave up the deep structure idea and tried out other linguistic structures for the role of a logical or semantical structure.

At one point, Chomsky thought that the logical forms of natural-language sentences might be captured by the formulas of first-order logic. This is a mistake. In its usual formalization, first-order logic operates with quantifiers with variables bound to them. In contrast, natural languages (and some logical languages) operate with choice terms. There is a certain irony here: choice terms are what Bertrand Russell called denoting phrases, and he sought to dispense with them by reducing them to quantifier notation.

Game-theoretical semantics offers a solution to the problem of semantical structure. The fact admittedly is that the semantical meaning of a sentence (its interpretation) has to be gathered from syntactical clues. Wittgenstein once wrote: “If you ask me how you can understand what I say, when all that you have are my symbols, then I ask you, how can I understand what I say, when all that I have are my symbols”. In a semantical game, the next move is determined by the sentence that the players are considering at the time of the move. For instance, if the sentence governing a move is $(\exists x)S[x]$, then the verifier must try to choose an individual $b$ such that $S[b]$ is true. The newly introduced $S[b]$ is then the sentence governing the next move. But that sentence $S[b]$ is not the initial sentence of the game. It is the sentence considered in the game at the time, and that sentence changes at each move syntactically and not only semantically. In our example it changes from $(\exists x)S[x]$ to $S[b]$. Rules for moves in a semantical game must accordingly have a syntactical component: rules for forming the new sentence to be considered at the next move.

Those rules move from compound sentences to simpler ones, the end-points being atomic sentences or their negations. If these rules are inverted so as to operate from the simpler to the more complicated, and newly introduced constants are replaced by variables, they become rules for constructing the earlier sentences from later ones. Such rules can then be taken as the rules of a generative grammar of the language in question. This grammar would be all that a language user needs to know in order to construct or to understand a sentence. More specifically, the whole syntax of the language or language fragment in question is captured by the syntactical part of those inverted game rules. This integrates syntax and semantics completely. Among other things, it shows what the syntactical clues are that direct a language user’s semantical choices.
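The inversion just described can be sketched for the existential rule alone. This is only an illustration under stated assumptions: the tuple encoding and the names `existential_move` and `inverted_move` are hypothetical, only one rule is modelled, and the inversion assumes that the constant was freshly introduced by the move (so it occurs nowhere else) and that no variable is bound twice:

```python
"""Sketch: a game rule with a syntactic component, and the same rule inverted.

Hypothetical encoding: ("exists", var, body), ("forall", var, body),
("and", f, g), ("or", f, g), ("not", f), ("atom", predicate, (term, ...)).
Assumes no variable is bound twice, so replacing terms blindly is safe.
"""


def replace_term(f, old, new):
    """Replace every occurrence of the term `old` by `new`."""
    op = f[0]
    if op == "atom":
        return ("atom", f[1], tuple(new if t == old else t for t in f[2]))
    if op == "not":
        return ("not", replace_term(f[1], old, new))
    if op in ("and", "or"):
        return (op, replace_term(f[1], old, new), replace_term(f[2], old, new))
    return (op, f[1], replace_term(f[2], old, new))  # quantifiers


def existential_move(sentence, chosen):
    """Forward rule: from (Ex)S[x] the verifier picks `chosen`; next sentence S[chosen]."""
    if sentence[0] != "exists":
        raise ValueError("rule applies only to existential sentences")
    _, var, body = sentence
    return replace_term(body, var, chosen)


def inverted_move(sentence, constant, var):
    """Backward (generative) rule: replace a freshly introduced constant
    by a variable and bind it, rebuilding the earlier sentence."""
    return ("exists", var, replace_term(sentence, constant, var))


if __name__ == "__main__":
    s = ("exists", "x", ("and", ("atom", "Man", ("x",)), ("atom", "Runs", ("x",))))
    t = existential_move(s, "b")
    print(t)  # ('and', ('atom', 'Man', ('b',)), ('atom', 'Runs', ('b',)))
    print(inverted_move(t, "b", "x") == s)  # True
```

Running the forward rule and then its inverse returns the original sentence, which is the sense in which an inverted game rule can serve as a generative rule.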

For instance a semantical game might start with the sentence

(4) Tom is not the only man who loves his wife.

As you can see, this sentence is ambiguous. The ambiguity is brought out by the following moves, beginning with the negation rule applied to (4):

(5) Tom is the only man who loves his wife.

(Negation rule changes the respective roles of the verifier and the falsifier.)

(6) Tom loves his wife, while no one else does it.

(Game rule paraphrasing (5).)

(7) Tom loves his wife.

(Conjunction rule from (6).)

(8) No one else loves his wife.

(Same conjunction rule. Both outcomes of this rule will be considered in what follows.)

(9) Someone else loves his wife.

(Negation rule applied to (8).)

(10) Dick is not Tom, and Dick loves his wife.

(Existential instantiation. Intuitively, the verifier finds Dick.) From (10) the game may move to either of the following:

(11) Dick is not Tom, and Dick loves Dick’s wife.

(12) Dick is not Tom, and Dick loves Tom’s wife.

(These are obtained by a game rule for dealing with (i.e. interpreting) personal pronouns.)

If we replace “Dick” by a variable, the reverse of either sequence, from (11) or from (12) back to (4), might be part of a syntactical production of (4), not unlike Chomsky’s two ways of generating (1).
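The divergence between the plays through (11) and (12) can be checked in a small hypothetical model. The sketch below does not simulate the game move by move; it evaluates the truth conditions that the two plays lead to, with the pronoun rule settled either as in (11) or as in (12). The men, wives and the loving relation are invented for illustration:

```python
"""Sketch: the two readings of (4) evaluated in a hypothetical toy model.

All names and facts below are illustrative assumptions, not from the text.
"""

MEN = ["Tom", "Dick", "Harry"]
WIFE = {"Tom": "Ann", "Dick": "Beth", "Harry": "Cleo"}
LOVES = {("Tom", "Ann"), ("Dick", "Beth")}  # who loves whom


def sentence_4(reading):
    """Truth of (4) as the negation of (6): Tom loves his wife, while no one else does.

    `reading` fixes the pronoun "his" in (8)/(9):
      "own"  -> the man's own wife, as in (11)
      "toms" -> Tom's wife, as in (12)
    """
    tom_loves_his_wife = ("Tom", WIFE["Tom"]) in LOVES  # (7)

    def loves_his_wife(man):  # the pronoun rule that yields (11) or (12)
        target = WIFE[man] if reading == "own" else WIFE["Tom"]
        return (man, target) in LOVES

    someone_else_does = any(loves_his_wife(m) for m in MEN if m != "Tom")  # (9)
    sentence_6 = tom_loves_his_wife and not someone_else_does  # (7) and (8)
    return not sentence_6  # (4) is the negation of (5)/(6)


if __name__ == "__main__":
    print(sentence_4("own"))   # True: Dick loves Dick's wife
    print(sentence_4("toms"))  # False: no one else loves Tom's wife
```

With Dick loving his own wife and no one but Tom loving Tom’s wife, (4) comes out true on the reading that leads to (11) and false on the one that leads to (12).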

This illustrates how rules for semantical games can operate both as rules of syntactical generation and as rules of semantical interpretation.

Such a unification of syntax and semantics offers several further advantages for linguistic theory formation. An interesting advantage is that we do not now have to locate the features that prompt the application of a game rule in the initial sentence, but only in the sentence that is being considered at the time the game rule in question is applied. For instance, we do not have to have a rule telling how to introduce the second individual constant by looking only at the initial sentence. This applies also, for instance, to syntactical rules like rules for reflexive pronouns.

The use of an anaphoric pronoun like “myself” is obligatory when it occurs in the same clause as its head. However, that clause need not occur in the initial sentence, but only in the sentence considered by the players at the stage at which a rule is applied. For instance, a semantical game might lead the players from

(13) I saw a snake near me.

to

(14) I saw a snake on the lawn, and the lawn was near me.

Why is “myself” not obligatory in (13)? Because the first-person pronoun needs to be “interpreted” only in (14), not yet in the initial sentence (13).

A general advantage is that the integrated theory vindicates Chomsky’s ingenious early idea that a sentence that can be derived syntactically in two different ways may for that very reason be semantically ambiguous. (See e.g. (8) above.)

Even more generally, we can see that anaphora is not a matter of coreference, but of the dependence of applications of semantical rules on each other. This is illustrated by a plethora of examples, such as the following:

(15) There is nobody there, for he would have had to climb over the barbed-wire fence.

(16) I drive a Volvo. They are safe cars.

(17) A couple was sitting on the bench. Suddenly she got up.

In none of these examples is the anaphoric pronoun in any natural sense coreferential with its head. Instead, the semantical interpretation of the anaphoric pronoun depends on that of its head. (Would one utter (17) on the proverbial isle of Lesbos?)

So far I have examined primarily such language-games as can be illustrated by means of games of seeking and finding. There are nevertheless other game-like activities involving language that can also be called language-games. Formal deduction can be considered such a game. Games of questions and answers are an important case in point. Such games are especially useful in epistemology. Indeed, any new item of information that enters into one’s reasoning can be thought of as an answer to an explicit or implicit question.

In epistemology, the nature of game theory as a strategy theory becomes crucial. In philosophical epistemology the main attention has been devoted to the evaluation of information that we already have, at the expense of the study of the ways of finding new truths. A study of the strategies of questioning is the best way of correcting this bias. The questioning games offer too large an embarrassment of riches to be discussed in detail here.

Leibniz once wrote that human nature reveals itself in games. Perhaps we can say that the nature of language is best revealed in games. I suspect that Peirce might have agreed with me.

Postscript: In order to avoid misunderstanding, I want to make it clear that game-theoretical semantics and IF logic are established branches of logical studies, but that the integration of syntax and semantics envisaged toward the end of this paper is at present only a project.

Literature

Many of the topics dealt with in this lecture are examined in my earlier work, including the following books:

HINTIKKA, K.J.J., 1985, Anaphora and Definite Descriptions (with Jack Kulas), D. Reidel, Dordrecht

HINTIKKA, K.J.J., 1991, On the Methodology of Linguistics (with Gabriel Sandu), Basil Blackwell, Oxford

HINTIKKA, K.J.J., 1998, Paradigms for Language Theory, Kluwer Academic, Dordrecht

HINTIKKA, K.J.J., 2007, Socratic Epistemology, Cambridge University Press, Cambridge